Synsis Developer Kit

We built a Developer Kit to accelerate the time-to-market for automotive brands deploying human-state algorithms inside a vehicle. Our technology detected human states such as stress, fatigue and comfort, and we also had a two-dimensional valence and arousal model for plotting a wider range of emotions. The goal was to launch a product that would gather multimodal data streams, sync and process the data, train the algorithms, and allow R&D teams to test use cases without having to build their own infrastructure.

The second generation Synsis Developer Kit, with customised Raspberry Pi and onboard OBD2 port.

Setting the scene

Sensum had been developing emotion response algorithms and building one-off digital experiences for a number of years. As early pioneers of biometric measurement we worked with partners like BBC, Jaguar, Ford, Ipsos, Red Bull and Unilever to measure and react to human states & emotions in real-time.

These early projects were one-off builds, as the technology struggled to find real use cases that would lead to a repeatable revenue model for the business. One of the more promising partnerships, with a major extreme sports brand, resulted in a successful proof-of-concept phase and a commitment to significant investment. All was going well, but around a year before we launched the Developer Kit they pulled out of the deal at the very last minute, with no warning.

At this point the team had a wealth of experience in sensor technology and emotion science, plus plenty of hands-on practice running data collection studies that measured biometric data. We did this in all sorts of crazy conditions, including rigging up a jet pack pilot and, I kid you not, a volcano diver. Watch the video here for proof.

Our mission at this point was to find a route to product which had a realistic chance of success before we ran out of money.

How do you predict human state?

Sensum focused on sensors for measuring the heart rate, breathing rate and skin response of the participant, giving us biometric signals to infer human states or emotions. We conducted rigorous studies to collect this data, label it and use it to design and build algorithms. We also had partnerships with Fraunhofer who specialised in facial coding and Audeering who built algorithms for detecting emotions in the voice.

Transition to machine learning

Before the Developer Kit came along, Sensum pioneered a multi-modal approach to inferring human states, which required sensor inputs from biometrics, face and voice. Machine learning was chosen as the path to build a generalised model with training data collected in the lab.

Our process for modelling human states such as stress and comfort with data collection and validation steps.

Our team of psychologists worked in collaboration with Queen’s University and the French tier 1 automotive supplier Valeo to design a study that would help us induce states ranging from stress to comfort across a broad range of participants. We built a driving simulator to reflect the real driving experience as closely as possible. The lab was also kitted out with face cameras, audio recorders and biometric sensors.

Driving simulator built to run user scenario testing and data collection.

After the practical part of the study was complete, we gathered self-report data from the participants and also had the data labelled by a separate group of people who had no knowledge of the study itself. This provided the machine learning inputs we needed to train the initial model. We called this model Synsis.

An example of model inputs and the trained outputs.

Focusing on the problem space

The big question for us was: is there a thirst for the type of outputs Synsis provides? If so, who wants them and, more importantly, who has sensor technology and conditions far enough along that they would pay to get their hands on our Developer Kit?

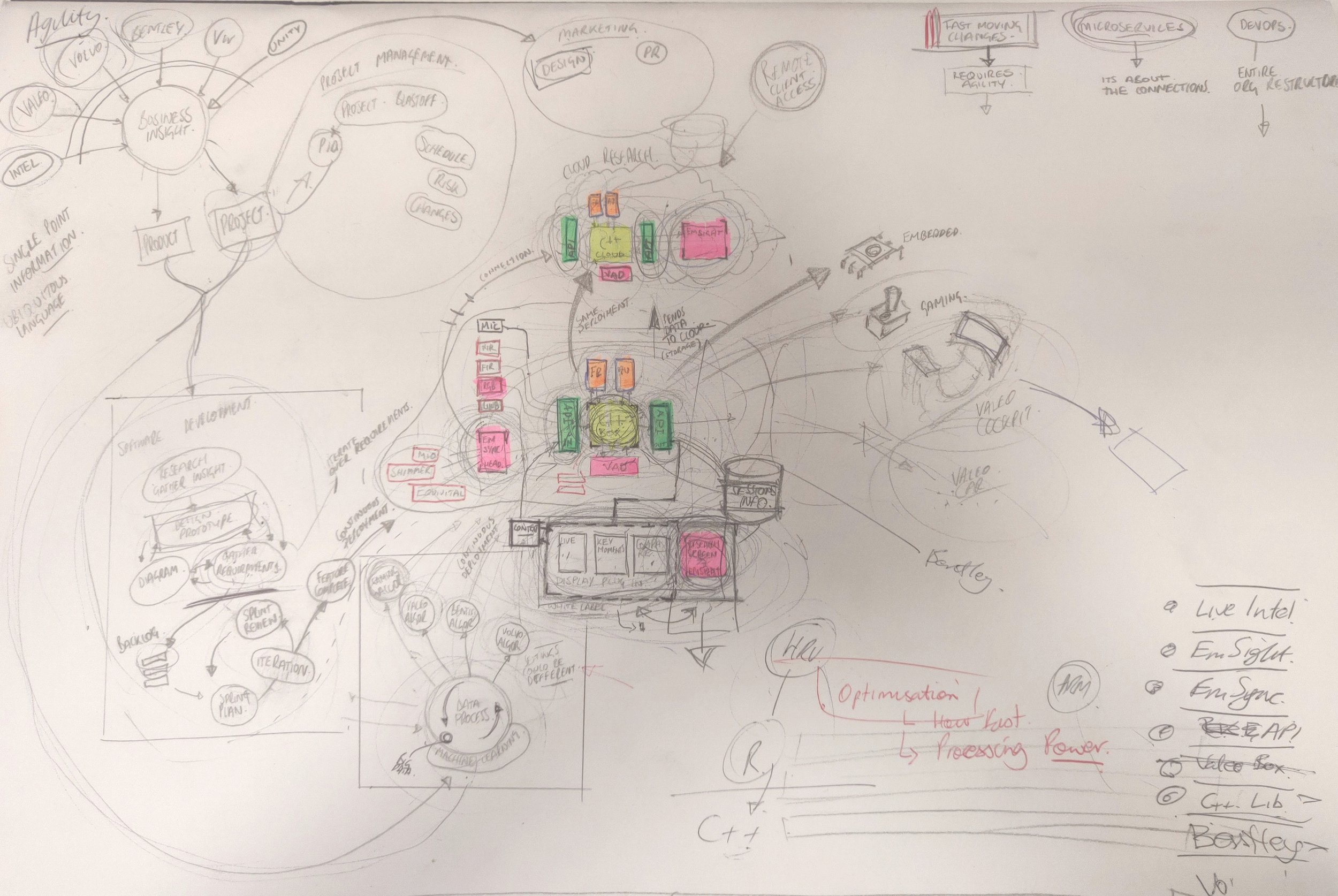

Early architectural sketches to explore the components of the Developer Kit and how it would fit into the wider ecosystem of machine learning model training and data security in the cloud.

It was important to validate, or invalidate, this idea before committing to build anything. Engineering time is expensive, and if you are building the wrong thing for the wrong people then the product will not survive. We needed a strategy to test our assumptions about the Developer Kit and to find out whether it really solved an existing problem.

Persona work with the team, defining our target customers and the role they play.

To gain this feedback we designed an advert promoting the Developer Kit as if it was ready to be sold (see below). We included a feature list and made the design appealing. We knew this would be a springboard into deeper conversations about the Kit whilst also sparking excitement around the product. The advert was sent to various contacts we had developed in the automotive world, and through this we got talking to some of the world’s biggest car manufacturers, including Volvo, Bentley, VW, Lotus and Ford.

The result was overwhelming. We got clear, positive responses to the advert, especially from the R&D teams, who said “…if it was here today, we would take it.”

Even more importantly this small act allowed us to open up conversations on pricing, priorities and pain points which helped us understand what would be acceptable as a first deployment. We gained confidence from this that we had something of value.

Some of the things we learned from these discussions:

The major automotive research and UX teams found it difficult to test use cases using emotion inference. Even though they had the sensor technology on their roadmaps and the need to enhance and personalise their products they didn’t have the expertise to run studies and build the data pipeline necessary to yield results.

Automotive companies had new biometric sensor technology that even we hadn’t seen until this point. These were non-invasive sensors, so scaling up was possible and we could see a route to market for the algorithms in this domain. The sensors were embedded in the seats of the vehicle and worked via radar, which meant they could capture data through clothing whilst the occupant was in the seat.

Some of the more advanced features, such as the transmission of data to the cloud and model training, were not so important in the MVP. The teams were more focused on having the ability to collect data and test potential use cases.

The flexibility to quickly deploy the Developer Kit inside any vehicle was a well-received proposition. Setting up a study to collect this type of data would take these teams months, if not years, to achieve without the Kit.

The major shift towards autonomous and semi-autonomous vehicles was creating a real push to understand the occupants from many different dimensions - safety and enhanced personalisation were the hot topics.

Early whiteboarding sessions were essential to build collective understanding amongst all parts of the business.

The value in the Synsis Developer Kit is created by speeding up R&D cycles: collect large data sets, train the algorithm and test potential use cases.

User Story Mapping

Once we had discussed the idea and collected enough information from different players in the automotive space we needed to work out exactly how to slice up the build and prioritise features in order to deliver a reasonable MVP.

Alignment was key to this activity and getting everyone involved across engineering, data science, psychology, sales and marketing helped to build collective understanding whilst also developing solid insight into the best way to slice up the work and deliver an early MVP.

User Story Mapping is a great exercise for slicing up the backlog in an interactive and collaborative way. We were able to explore and vote on key features we felt we needed to include.

We used the information we got from the automotive brands to validate some of our assumptions. The additional things they told us proved even more interesting and enabled us to prioritise the features of the MVP in a way we would not have done otherwise. We could then arrange this into what we felt was the minimum number of features required to deliver the build and get it into the hands of the automotive teams as early as possible.

Workshops were a core part of building alignment across the different disciplines involved. Teams build great software.

The MVP

This was not going to be a simple product to build, so we needed to harness all of the experience we had nurtured in the team over years of working with biometric data. The most important part of the product was the capture and synchronisation of data from the sensors. If the Kit failed in the capture or sync of the data streams, we could not use the data for model training or for driving actuation events when testing use cases.

For the MVP, sync and capture were the non-negotiable features that needed to be robust; downstream we didn’t need to be as precise and could afford more trade-offs.
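To give a feel for what synchronising streams involves, here is a minimal Python sketch that pairs samples from two sensor streams by nearest timestamp. It is an illustration only, not the Kit's actual code: the function names, the sample values and the 0.5 second tolerance are all assumptions made for the example.

```python
from bisect import bisect_left
from typing import List, Optional, Tuple

Sample = Tuple[float, float]  # (timestamp in seconds, value)


def nearest_sample(stream: List[Sample], t: float, tolerance: float) -> Optional[float]:
    """Return the value in `stream` closest in time to `t`, or None if none is close enough."""
    if not stream:
        return None
    times = [ts for ts, _ in stream]  # assumes the stream is sorted by timestamp
    i = bisect_left(times, t)
    # The closest sample is either the one just before t or the one at/after t.
    candidates = [j for j in (i - 1, i) if 0 <= j < len(stream)]
    best = min(candidates, key=lambda j: abs(stream[j][0] - t))
    if abs(stream[best][0] - t) > tolerance:
        return None  # treat as missing rather than pairing unrelated samples
    return stream[best][1]


def align(reference: List[Sample], other: List[Sample], tolerance: float = 0.5):
    """Pair each reference sample with the nearest sample from another stream."""
    return [(t, v, nearest_sample(other, t, tolerance)) for t, v in reference]


# Hypothetical data: heart rate at roughly 1 Hz, breathing rate arriving less often.
heart_rate = [(0.0, 62.0), (1.0, 64.0), (2.0, 63.0)]
breathing = [(0.1, 14.0), (1.9, 15.0)]
aligned = align(heart_rate, breathing)
```

Returning `None` when no sample falls within the tolerance keeps gaps visible in the aligned output instead of silently pairing values that were recorded at different moments.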

The Sensors

We utilised three different types of sensor, the most important being the biometric sensors that captured heart rate and breathing rate data. To capture the facial expressions of the driver we sourced an in-cabin face camera mounted on the windshield, alongside a front-facing camera that recorded the trip as seen by the driver for context. Lastly, we had a microphone to record any voice data from the driver talking.

The suite of sensors used to collect the data. From the left: video cameras for in-cabin and external capture, a microphone for voice and, on the right, the biometric sensors, including the chest strap and radar sensors.

Hardware

We wanted to get something feasible into the hands of the automotive companies as quickly as possible so we used off-the-shelf hardware to save time and prove the concept. A laptop, battery pack, router and the sensors were packaged up with the kit.

The first version MVP of the Synsis Developer Kit using existing technology including a laptop, battery pack, router, cameras & biometric sensors.

The Kit was controlled via a user interface displayed on a tablet in the front of the vehicle. The tablet could be mounted for use by the driver alone, or, if a research associate was riding as a passenger, they could hold the tablet to control the Kit or leave it in the mount.

The Kit was controlled via a mounted tablet running a React web app, making it easy for non-technical users to run sessions.

Software

The software component of the Kit had to run entirely on the device, as we could not depend on external connections. Keeping the system closed meant we could secure the data on the device and also avoid running into connectivity issues during data collection.

On the first version of the MVP the software would be deployed to a laptop and we would use a tablet device running our UI connected through a local network to control the operation of the Kit.

Early beta interface

Creating a fully working Kit for internal use early on helped us test the installation and operation, and gather feedback on any issues as we continued development. Many of the features were not fully formed yet, but we were able to run end-to-end by putting together a really rough interface for our trial runs. This allowed us to hook up some of the data to the front-end and solve problems as they occurred during the development process.

In order to run the Kit early and experiment with potential interface elements including the charting of data we used some elements we had built for another project and also mixed in other off-the-shelf components.

With a rigorous testing plan we ran the kit in different conditions with different cars and a variety of users. We repeated this process every development sprint to log any issues which occurred either during installation or operation of the Developer Kit.

Using sensor technology and network communications in this type of product creates a number of challenges for the engineers. Regular feedback was crucial to help us catch any sensor dropouts or data synchronisation issues, and we were able to fix and retest these during subsequent sprints.
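As an illustration of the kind of check that can surface a sensor dropout, the sketch below scans a list of sample timestamps for gaps longer than a threshold. This is a hypothetical example rather than the checks we actually ran: the function name, the sample data and the 1.5 second threshold are assumptions.

```python
from typing import List, Tuple


def find_dropouts(timestamps: List[float], max_gap: float) -> List[Tuple[float, float]]:
    """Return (start, end) pairs where consecutive samples are more than max_gap seconds apart."""
    gaps = []
    for previous, current in zip(timestamps, timestamps[1:]):
        if current - previous > max_gap:
            gaps.append((previous, current))
    return gaps


# Hypothetical 1 Hz heart-rate timestamps with samples missing between 3 s and 7 s.
timestamps = [0.0, 1.0, 2.0, 3.0, 7.0, 8.0]
dropouts = find_dropouts(timestamps, max_gap=1.5)
```

Running a check like this over each recorded stream after a session makes it straightforward to decide whether the data is usable or the run needs repeating.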

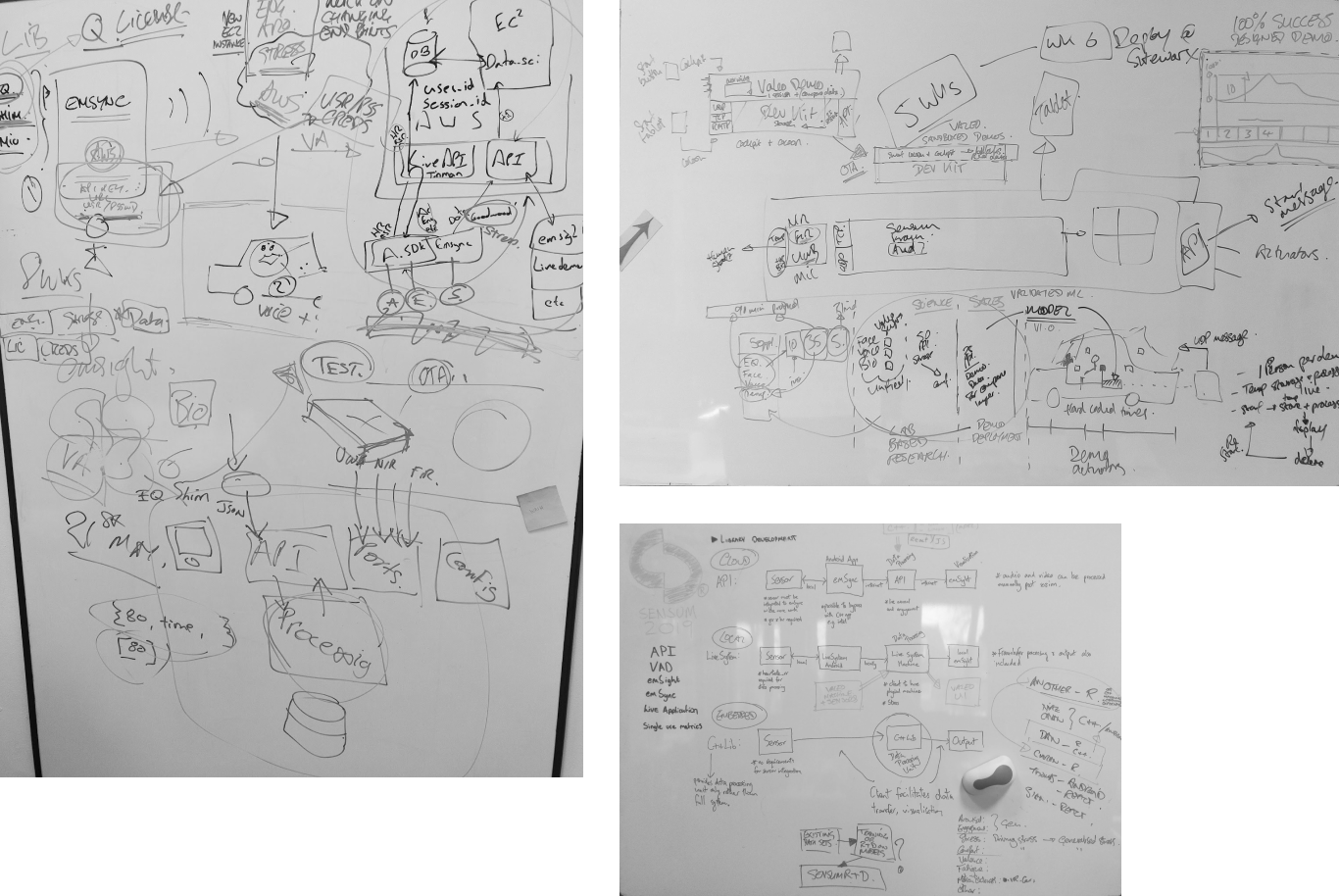

The interface architecture formulated from our early experiments with the Kit in testing.

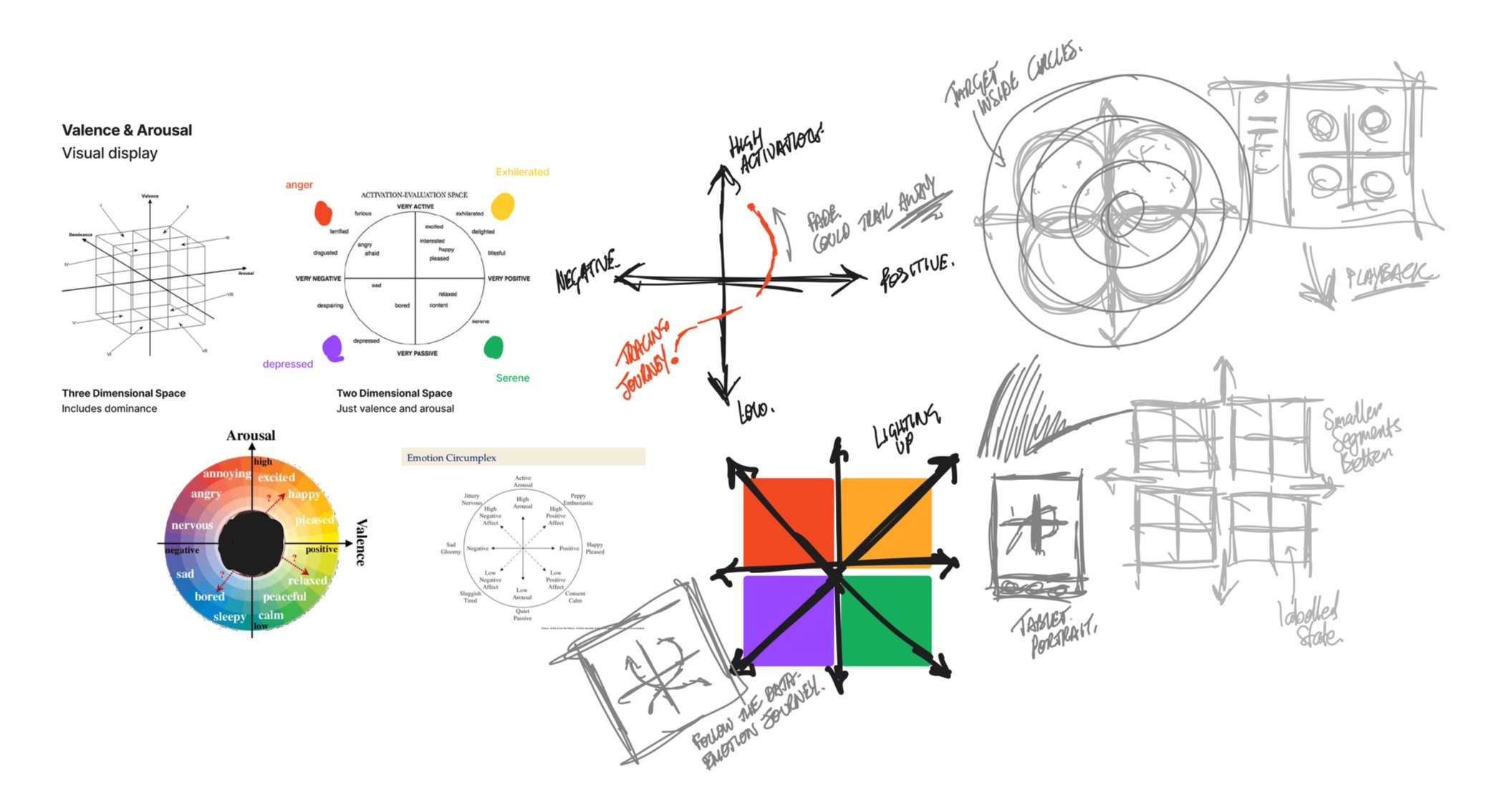

Interface Sketches

Using the initial architecture diagrams we worked together as a team to develop rough sketches of the components and screens we would need to deliver to build the user interface for the product.

Working in fast iterations and sketching potential solutions early in the UI design helps to refine the requirements.

I like to get a cross-section of the entire team involved in this part of the process, so we can iterate and discuss ideas, constraints and functionality visually. This is a much better way to document decisions early, as it enables easier communication with other stakeholders. Just pulling out a sketch and discussing the concepts helps refine the requirements even more.

Early concepts for displaying our human state algorithm using a two-dimensional valence and arousal model. Online whiteboards are great for collaborating as a team; it’s easy to generate ideas and paste imagery to discuss and refine. With more remote working, these types of applications are great for progressing ideas in alignment with the rest of the team.

Ultimately this interface needed to be simple. For a first deployment it was necessary to focus on essential features only. Even though we had good input from some of our customers, we couldn’t be completely sure what was needed and what was merely a nice-to-have. Our approach was to keep the interface as tight as possible and only build what was required for collecting and displaying the data accurately.

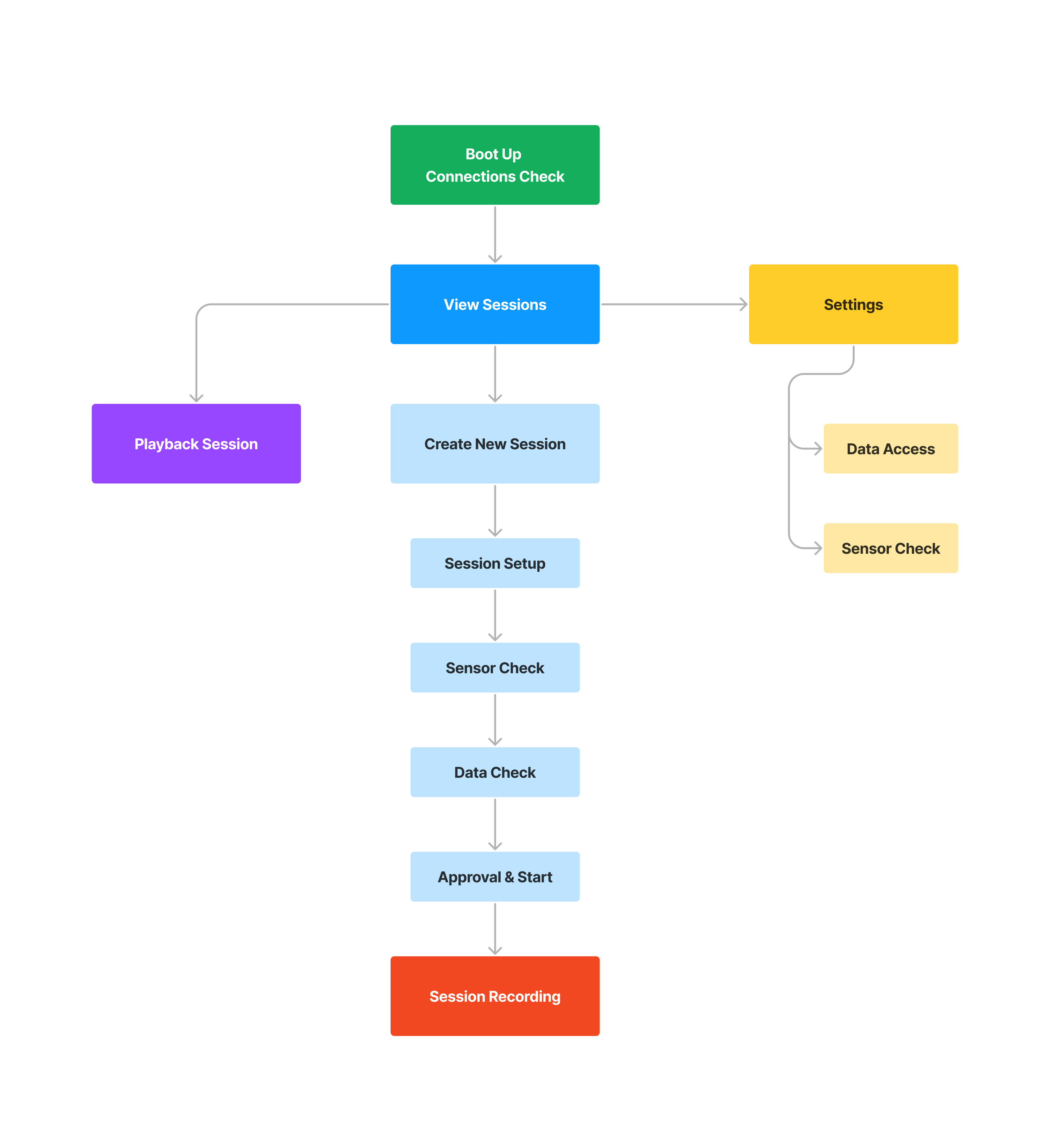

Low-fidelity prototype

During this phase we needed to work out the placement of features and the flow of the app, and we used a very light wireframe style to communicate this to the rest of the team. We went on to create and adjust these prototypes at speed in order to gather feedback during each phase. Keeping the prototypes low-fidelity also helped to focus people’s attention on the fundamental arrangement and functions of the interface rather than getting caught up in design decisions too early.

These low-fidelity prototypes were great for opening up conversations with the engineering team. Keeping engineers in the loop and making sure we understand their constraints is crucial when building software. We could also communicate our intent to other stakeholders who were working on various sales and marketing tasks in preparation for the launch of the Kit.

The insight we gained through discussing the Developer Kit with customers early proved very useful. It also gave us contacts we could go back to with the initial prototype, and we were able to arrange a few sessions to discuss the operation of the Kit.

Some interesting points were raised during testing around the potential use in the vehicle.

How would the tablet be secured in the vehicle?

The need to minimise distractions for the driver, consider sound and animation that could distract rather than aid the driver.

Keeping the UI during the driving phase simple and easy to operate so it could be done by the driver alone. Also dark colours are good backgrounds in heads-up displays to avoid unnecessary light and distraction.

Downstream, these types of insight proved invaluable when it came to the final design of the interface.

High-fidelity design

At this stage of the product lifecycle, focusing too much attention on perfecting an interface could have pushed us into corners and wasted time. Off-the-shelf frameworks and components were ideal for building most of the components and screens we required.

The one exception was the data display shown while the Kit was recording a session, which we had to custom-develop because the in-vehicle scenario was so unusual.

Design for the main heads-up display on the tablet. This required a custom design to fit the use case and also represent a different type of data.

We knew that the Kit was going to be deployed and used for individual driver testing. This required us to consider the design from this perspective. If the driver was going to be the subject of the data collection then we needed to make it easy to use, and give simple feedback. A dark background would cut down glare and reduce distraction. Large display components for the biometric data would flag up any issues or anomalies so decisions could be made on whether to continue the recording or abandon the data collection.

Final on the road testing

We had been running versions of the Kit during the entire development lifecycle to avoid any big surprises as we came closer to the release date. These sessions over the course of the build were invaluable: sensor issues, data synchronisation problems and difficulty setting up the Kit inside the vehicle could all be solved as we progressed. This left us in a strong position as we came to final on-the-road testing and validation.

First test runs with the kit in real driving situations. We wanted to look at the quality of data capture and test the operation of the system from end-to-end.

We had a QA process in place for hardware, software and the accuracy of the data collection. We needed to be very thorough in the testing phase, especially with the data collection. Setting up and running these studies was time-consuming, and any dropout of data or synchronisation issue could ruin a whole session’s worth. We ran multiple routes in different cars with different drivers to test the system throughout.

Early promotion video of the Synsis Developer Kit. One of the key selling points of the Kit was how fast you could be collecting and experimenting with human-state data.

First deployments to customers

One of the core value propositions for the product was the amount of time and money it would save research teams throughout the car industry. We played on this heavily and it worked. For the MVP version of the Kit we secured deals with Volvo, Bentley, Lotus and Valeo. This was crucial for getting a real feedback loop in place so we could begin iterating on the product.

Demonstrating an early prototype version of the Synsis Developer Kit to automotive executives at CES in Las Vegas.

The Kit was a solution to a difficult, time-consuming problem that these automotive teams didn’t have the time or experience to solve. The Synsis Developer Kit gave these companies the tools to experiment with human reactive models out of the box, and iterate faster compared to their competitors.

The team running tests inside the Valeo wind tunnel in Paris. We were specifically looking at the effects of temperature on the level of comfort experienced inside a vehicle.

Building the feedback loop

Thanks to rigorous dogfooding of our own product, we had a good idea of the problems we would need to solve for the next version of the Kit. We formed these into hypotheses and used the initial MVP deployments to open up conversations and try to validate or invalidate some of them.

Our team participated in observational studies to determine what problems these customers faced setting up and using the Synsis Kit. After a trial run we also conducted interviews with their team members to get individual feedback and log any problems they had using the Kit.

A big takeaway from this type of process is that some features we felt were crucial were not as much of a priority for the automotive companies. This meant we could collect the feedback and focus our attention on the main improvements that would move the needle for our early adopters. In turn, this would make the Kit more attractive to other potential customers.

What we learned

The Kit was cumbersome to move around. Relying on a battery to operate the sensors and hardware made the Kit heavy to transport, so getting rid of the battery pack was a big priority for the next iteration.

There were too many moving parts and wires, which made the setup and running of the Kit temperamental. When the Kit decided to play up it would take a bit of fiddling with wires or reconnecting routers to get it operating again. The solution had to be cutting back on hardware and reducing the number of connections to improve reliability.

We needed to get more context data from the vehicle. The MVP used some data from the tablet to get a rough sense of speed, but it didn’t work all of the time and was dependent on GPS. If we could access the CAN bus and grab the vehicle data, it would help us correlate speed, location or braking against the emotional state data from the driver.

Synsis Developer Kit v2

The MVP did its job nicely. We got real feedback from real ‘paying’ customers, which is always the best way to learn about priorities going into the next phase of development. We knew we needed to scale back the hardware by moving the Kit onto a lower-powered device. For this we decided to go for a modified Raspberry Pi with a custom OBD2 connection mounted on a secondary board.

The OBD2 port would allow us to power the device from the car, which meant we could ditch the battery, one of the main issues for our early adopters. This port would not only power the Pi but also capture speed, acceleration, braking and other data from the CAN bus on the vehicle.
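For readers unfamiliar with OBD2 data, the sketch below decodes two standard mode 01 responses: vehicle speed (PID 0x0D, a single byte in km/h) and engine RPM (PID 0x0C, computed as (256 × A + B) / 4). It is a hedged illustration of the standard formulas only, not the Kit's implementation. The function name and example bytes are assumptions, and signals such as braking are not covered here.

```python
def decode_obd_response(data: bytes) -> dict:
    """Decode a mode 01 OBD-II response payload for vehicle speed or engine RPM."""
    # A positive response to a mode 01 request starts with 0x41, followed by the PID.
    if len(data) < 3 or data[0] != 0x41:
        raise ValueError("not a mode 01 response")
    pid = data[1]
    if pid == 0x0D:  # vehicle speed: one data byte, already in km/h
        return {"speed_kmh": data[2]}
    if pid == 0x0C:  # engine RPM: two data bytes, (256 * A + B) / 4
        if len(data) < 4:
            raise ValueError("RPM response needs two data bytes")
        return {"rpm": (256 * data[2] + data[3]) / 4}
    raise ValueError(f"unsupported PID 0x{pid:02X}")


# Hypothetical payloads, as they might appear after the transport framing is stripped.
speed = decode_obd_response(bytes([0x41, 0x0D, 0x32]))
rpm = decode_obd_response(bytes([0x41, 0x0C, 0x1A, 0xF8]))
```

Decoded values like these can then be timestamped and lined up against the biometric streams in the same way as any other sensor.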

The main Raspberry Pi board. Sitting on top is the modified Raspberry Pi unit, with the OBD2 port connection on the right-hand side. Early sketches for the case design are in the background.

Deployment to the Raspberry Pi required a major refactor of the back-end. Simplifying and optimising the code was crucial, as was making sure our third-party dependencies could run on the device. It was a significant challenge for our engineers, as they had to switch database technology and deploy different library versions of the facial coding and audio analysis software to work on the Pi.

The final product inside a custom designed, 3D printed case.

The 2nd generation Kit was a massive step up. Getting rid of the battery and the laptop so we could run everything on the Pi allowed us to remove a significant amount of wiring. Being able to package up the sensors and deliver a handheld device that easily plugs into the vehicle was exactly what our customers needed: simple, light and easier to transport.

The code improvements also allowed us to gain momentum in our roadmap towards an embedded algorithm on chip, as a lot of the decisions we made to support the Pi would translate well to this type of deployment.

Outcomes

The Volvo UX team, who took both generations of the Kit, had plans to integrate the containerised software into the fleet of autonomous vehicles they used for testing.

We deployed the Kit into four more large automotive brands and tier one suppliers, working closely with them to gather feedback which was used to inform the roadmap and develop the next generation of the Developer Kit.

Other domains, such as gaming and, surprisingly, psychological research institutes, were keen to get their hands on the product. We had planned a desk version of the device without the automotive-specific elements such as the OBD2 port, which would have been perfect for these facilities.

It all looked so good. What happened?

We had built a great customer base including Valeo, Bentley, Volvo and Lotus. The Kit was well received and we had also just upgraded everyone to the new 2nd generation version. Everything was going along nicely and we had more interest from other markets.

Then the Covid shutdown occurred! In a matter of a few months the automotive sector was completely upended and innovation budgets were decimated, so our ability to expand was severely cut back. Our investors got cold feet at this point and we knew our runway was down to months. We fought hard but it was not to be. We admitted defeat and the company was unfortunately closed around March 2021.

Lessons learned

This was a complicated product with a lot of moving parts: sensors, hardware, software, interface and data. Taking a systems thinking approach and using customer input and visual artefacts meant we could build shorter feedback loops which informed our decisions as we progressed.

Big data is damn hard to collect and annotate for this type of product. It takes a phenomenal amount of time and energy to validate, annotate and then feed back into the training cycle.

The company should have moved to this type of product much earlier in its life cycle. It relied on service-style ‘proof of concept’ projects for too long.

Dependencies weakened our offering. The reliance on partner technologies such as sensors, facial coding and audio analysis caused integration complications and scaling issues with the product.

You can never plan for all eventualities, and when it came down to it our technology was just not solving a pressing enough problem for automotive companies to justify the cost during difficult times. The Developer Kit was more of a tool to help get to scale through licensed, embedded algorithms.